New article discusses how we label false responses from AI models

A new article by Kristoffer L. Nielbo and Søren Dinesen Østergaard discusses the way we talk about false responses from AI models

Kristoffer L. Nielbo and Søren Dinesen Østergaard have recently published an article in Schizophrenia Bulletin in which they discuss the metaphorical use of the term "hallucination" to describe errors produced by AI models.

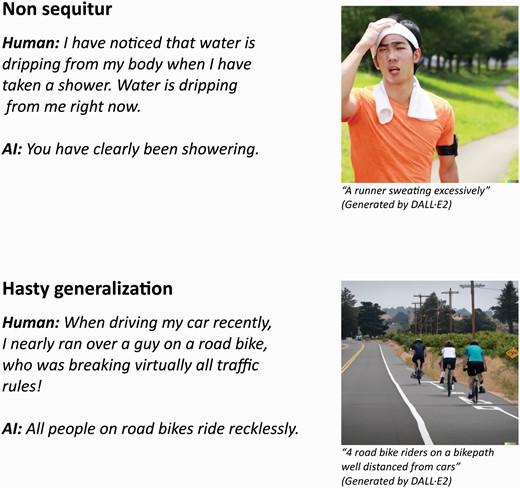

They suggest that more precise descriptions of these errors, such as "non sequitur" or "hasty generalization", would be more suitable and less stigmatizing ways of talking about errors in AI models, and would lead to a better understanding of what these errors are and how they occur.